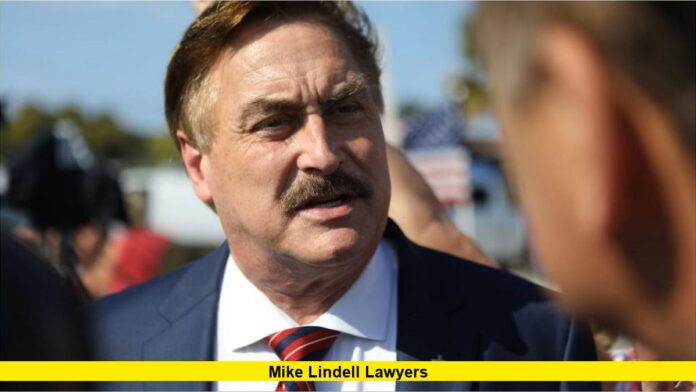

The legal world is buzzing after Mike Lindell’s lawyers were penalized for misusing AI in a court filing, resulting in $6,000 in combined fines and a scathing judicial rebuke that’s sending shockwaves through the profession.

The AI Legal Disaster That Has Everyone Talking

In what’s being called one of the most embarrassing legal AI failures to date, two attorneys representing MyPillow CEO Mike Lindell were fined $3,000 each on Monday for filing a motion riddled with AI-generated errors. The case has become a cautionary tale about the dangers of blindly trusting artificial intelligence in legal proceedings.

Federal Judge Nina Y. Wang didn’t hold back in her criticism, describing the attorneys’ actions as “blind reliance on generative artificial intelligence” and threatening them with the highest level of sanctions, including potential referral to their state bars for disciplinary proceedings.

The Shocking Details: Nearly 30 Defective Citations and Nonexistent Cases

The scope of the AI-generated disaster is staggering. Lindell’s legal team confessed to submitting a brief with “nearly 30 defective citations”, many of them referencing cases that simply don’t exist. The AI-generated filing was described as “rife with errors and fake citations” that could have seriously misled the court.

What Went Wrong:

- Fake legal precedents: AI created citations to nonexistent court cases

- Fabricated legal authorities: References to rulings and authorities that don’t exist

- Procedural violations: Failure to verify AI-generated content before filing

- Professional misconduct: Violating basic legal standards and court rules

The Attorneys’ Defense Falls Flat

Attorney Christopher Kachouroff said that he “personally outlined and wrote a draft of a brief before utilizing generative” AI. The legal team also argued that the error-filled document was submitted by mistake due to “human error,” but that explanation failed to impress the judge.

The attorneys admitted to using AI, insisting that “There is nothing wrong with using AI when properly used,” but that defense couldn’t overcome the magnitude of their oversight.

Why This AI Legal Scandal Matters

This incident represents a watershed moment for AI use in legal practice. The judge’s order is being described as “a wake-up call for attorneys who use frontier generative artificial intelligence (Gen AI) models in legal research”.

The case highlights several critical issues:

Legal Profession Credibility: When attorneys submit fabricated citations, it undermines the entire judicial system’s integrity.

AI Hallucination Risks: This case demonstrates how AI can “hallucinate” or create convincing but completely false information.

Professional Responsibility: Lawyers have a duty to verify all information before submitting it to courts.

The Broader Impact on Legal AI Use

The error-filled motion was filed in a defamation lawsuit against Mike Lindell, which ended last month with a jury finding him liable for false claims about the 2020 election. This timing couldn’t be worse for Lindell, who’s already facing significant legal and financial challenges.

Industry Response:

- Law firms are now scrambling to implement AI verification protocols

- Bar associations are considering new guidelines for AI use in legal practice

- Legal technology companies are emphasizing the need for human oversight

What This Means for the Future of Legal AI

The judge called the nearly 30 defective citations “troubling”, and that sentiment is echoing throughout the legal community. This case will likely become required reading in law schools and a staple of mandatory training for practicing attorneys.

The incident serves as a stark reminder that while AI can be a powerful tool for legal research, it requires:

- Rigorous fact-checking and verification

- Human oversight at every stage

- Clear protocols for AI-assisted legal work

- Ongoing education about AI limitations

The Viral Reaction and Social Media Buzz

The story has exploded across social media platforms, with legal experts, journalists, and the general public sharing their reactions. The combination of Mike Lindell’s controversial reputation and the spectacular nature of the AI failure has created a perfect viral storm.

Key viral elements:

- Celebrity defendant with existing controversies

- Cutting-edge technology gone wrong

- Professional embarrassment on a grand scale

- Clear consequences and accountability

Lessons Learned and Moving Forward

This case will undoubtedly become a landmark example of how not to use AI in legal practice. The MyPillow CEO’s lead counsel filed an error-riddled court document authored by a “hallucinating AI,” according to the judge.

The combined $6,000 in fines may seem modest compared with the reputational damage and potential long-term consequences for the attorneys involved. The incident will likely follow them throughout their careers, and it serves as a cautionary tale for the entire legal profession.

The Bottom Line

The AI disaster involving Mike Lindell’s legal team represents a perfect storm of technological overreliance, professional negligence, and high-profile embarrassment. As the legal world grapples with integrating AI tools, this case will serve as a sobering reminder that artificial intelligence is only as good as the humans who use it.

The $6,000 penalty is just the beginning. The real cost will be measured in damaged reputations, increased scrutiny of AI use in legal practice, and the precedent this sets for future cases involving AI-generated legal documents.

What’s your take on this AI legal disaster? Should lawyers be banned from using AI, or do we need better safeguards? Drop your thoughts in the comments below and share this story with anyone who needs to see what happens when AI goes wrong in the courtroom!