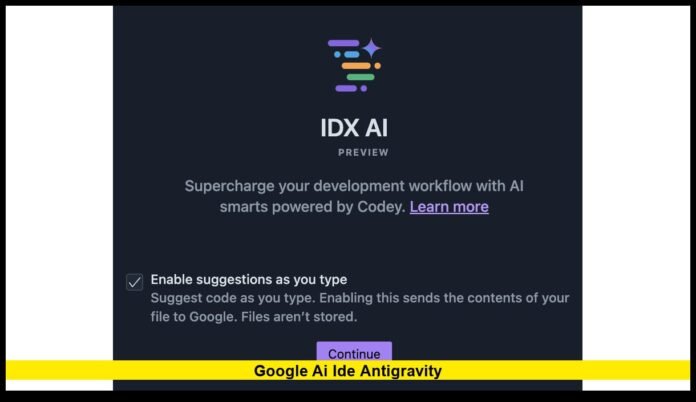

Google’s AI-powered IDE, Antigravity, has been shaping conversation across the tech world since its public preview launched in mid-November 2025. Within days of the announcement, developers across the United States began exploring what this new environment means for the future of software creation. The early response has been strong, with many calling it the most ambitious change to coding workflows that Google has shipped. With the preview’s features now publicly available, the platform offers a clearer picture of how Google intends to redefine the developer experience.

A Breakthrough Designed for Modern Coding Challenges

Google’s new development environment introduces a design philosophy unlike anything the company has released before. Instead of focusing on predictive text or code suggestions, the platform revolves around autonomous agents that can complete end-to-end tasks. This approach represents a major leap from traditional IDEs, which rely heavily on manual input for every step of the development cycle.

The platform includes a structured editor, a dedicated agent manager, and a workspace that allows AI to run commands, analyze projects, and interact with tools inside the environment. Developers remain in control, but the IDE reduces repetitive workload by allowing agents to take over tasks that typically require time-consuming manual execution.

The broader goal is clear: enable creators to design solutions while the system handles operational steps that often slow production.

Why This Platform Matters Right Now

The timing of this release reflects Google’s strategy to push AI deeper into practical, daily workflows. For years, developers have relied on tools that offered suggestions or helped fill in code blocks. However, as applications become more complex and as teams manage tighter development schedules, incremental tools aren’t enough. Google’s new environment responds to these challenges by elevating AI from a passive helper to an active collaborator.

This shift aligns with major advancements in automated reasoning, tool control, and command-execution models, capabilities that now allow AI to handle more of the development pipeline. The release gives developers a tool that not only accelerates tasks but can also assist in structuring, testing, and analyzing entire projects.

How the Platform’s Agent System Works

The agent system is the foundation of the new IDE. It replaces the pattern of developers manually completing long lists of tasks with a model where they delegate work to specialized AI units. The system can spawn different types of agents depending on the instruction, but the general workflow follows a consistent structure:

1. The developer defines the task

The task can be simple, such as updating a file, or more complex, like configuring a multi-page web application. Instructions are written in natural language, which the system converts into actionable steps.

2. The agents take over operations

Once a task is assigned, the platform launches the appropriate agents. These agents may:

- Draft new code

- Analyze the existing project

- Execute test commands

- Launch a virtual browser window

- Refactor files

- Validate UI output

- Provide detailed reports of all actions taken

These processes occur within the IDE itself, allowing developers to monitor activity firsthand.

3. A transparent record is created

A central goal of the design is trustworthiness. For that reason, each agent must produce a complete artifact trail. These records may include terminal output, logs, lists of completed actions, or screenshots. All artifacts remain inside the IDE, so developers can review every step before moving forward.

4. The developer reviews and adjusts

During review, the developer may accept results, refine instructions, or trigger additional tasks. Oversight remains essential, but the AI handles much of the repetitive work that developers would otherwise do by hand.
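
The four steps above can be sketched as a small loop. This is only an illustrative model of the workflow, not Antigravity’s actual API: the names `Artifact`, `TaskRun`, `fake_agent`, and `review` are all hypothetical, and the "agent" here is a stand-in that simply records what it claims to have done.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Artifact:
    kind: str      # hypothetical kinds: "action_list", "log", "screenshot"
    summary: str


@dataclass
class TaskRun:
    instruction: str  # step 1: the natural-language task
    artifacts: List[Artifact] = field(default_factory=list)


def fake_agent(instruction: str) -> TaskRun:
    """Steps 2 and 3: a stand-in agent that 'works' and records artifacts."""
    run = TaskRun(instruction)
    run.artifacts.append(Artifact("action_list", f"plan for: {instruction}"))
    # Pretend tests are only run when the instruction asks for them.
    outcome = "passed" if "test" in instruction else "not run"
    run.artifacts.append(Artifact("log", f"tests {outcome}"))
    return run


def review(run: TaskRun) -> str:
    """Step 4: the developer's decision, reduced to a single rule."""
    logs = [a.summary for a in run.artifacts if a.kind == "log"]
    return "accept" if logs and all("passed" in s for s in logs) else "refine"


first = fake_agent("rename the settings page")
decision = review(first)  # no passing test log in the trail, so refine
if decision == "refine":
    second = fake_agent("rename the settings page and run the tests")
    decision = review(second)

print(decision)
```

The point of the sketch is the shape of the loop: the developer never inspects the agent directly, only the artifacts it leaves behind, and a weak trail leads to a refined instruction rather than an accepted result.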

Key Features That Stand Out

The system introduces several components that separate it from the tools most developers are accustomed to:

Agent Manager

This component acts as the control center for all automated tasks. Developers can see pending assignments, completed jobs, and agent activity in real time.

Environment Integration

The platform incorporates a full terminal and browser simulation. Agents can run code, execute commands, and inspect web applications inside the environment without relying on external tools.

Artifact-Based Verification

Instead of returning simple text responses, each agent produces tangible results. This system helps users identify mistakes, understand execution paths, and maintain accountability.
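
One way to picture artifact-based verification is as an audit over the agent’s reported actions: anything without supporting evidence gets flagged for a closer look. The sketch below assumes a made-up trail format (a list of dictionaries with `action` and `evidence` keys); the real artifact structure is not described here.

```python
from typing import Dict, List

# Hypothetical trail: each reported action with the evidence attached to it.
trail: List[Dict] = [
    {"action": "edit src/app.py", "evidence": ["diff"]},
    {"action": "run unit tests", "evidence": ["terminal log"]},
    {"action": "check login page renders", "evidence": []},
]


def unverified_actions(steps: List[Dict]) -> List[str]:
    """Return the actions that have no supporting artifact."""
    return [step["action"] for step in steps if not step["evidence"]]


missing = unverified_actions(trail)
print(missing)
```

In this example the third action claims a UI check but carries no screenshot or log, so it is the one a reviewer would question first.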

Cross-Platform Support

The platform runs on Windows, macOS, and Linux, making it accessible to nearly all development teams across the United States.

Growing Developer Interest and Early Impressions

Although the platform remains in its preview phase, the initial reaction has been overwhelmingly positive. Developers appreciate that the environment bridges a longstanding gap: the need for AI tools that not only write code but also perform the procedural tasks required to validate and test that code.

Early testers report notable gains in:

- Time savings during setup and configuration

- Error reduction in repetitive file updates

- Faster testing cycles

- Easier iteration for multi-step tasks

- Improved clarity during team collaboration

Some users have also praised the platform for improving the overall educational experience for new programmers. When agents generate artifacts, beginners can see the exact sequence of steps required to complete certain tasks, providing clearer guidance than static examples.

The Level of Autonomy in Real Use Cases

In preview tests, agents have demonstrated the ability to handle a range of advanced operations. These include:

- Creating new project structures

- Generating multi-file components

- Debugging code through iterative testing

- Designing UI elements and validating their behavior

- Simulating complex interactions in browser previews

- Managing package installations and environment updates

During these operations, developers maintain complete oversight. Agents act only when the user triggers them, which keeps safety and compliance under the developer’s control.

Where This Technology Fits in the Evolving AI Landscape

The release of this IDE fits into a larger movement toward agent-powered ecosystems. Many companies have introduced AI assistants that offer code suggestions or answer questions. However, Google’s approach focuses on autonomy, transparency, and full project interaction.

This positions the platform as a pioneer in what many experts believe will become the next phase of development tools: environments that execute tasks, think through processes, and manage multi-step workflows with minimal friction.

For companies in the U.S. looking to modernize operations or shorten development timelines, these capabilities may significantly reshape their strategies over the next few years.

Important Considerations for Developers

Although the system brings transformative benefits, developers should be aware of a few practical realities during the preview period.

It is still evolving

The preview version may contain incomplete features or integrations that will expand over time.

Cost management remains important

The IDE links directly to Google’s model platform, which uses token-based pricing. Larger builds or long testing cycles can increase usage if not monitored.
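
To see how usage adds up under token-based pricing, a rough estimate can be computed from token counts and per-million-token rates. The rates and token counts below are placeholders chosen to make the arithmetic easy; they are not Google’s actual prices, and `estimate_cost` is a hypothetical helper.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  usd_per_million_in: float, usd_per_million_out: float) -> float:
    """Estimated spend in USD for one agent session under per-token pricing."""
    return ((input_tokens / 1_000_000) * usd_per_million_in
            + (output_tokens / 1_000_000) * usd_per_million_out)


# Placeholder values only: 400k tokens read, 50k tokens written.
session_cost = estimate_cost(400_000, 50_000,
                             usd_per_million_in=2.00,
                             usd_per_million_out=10.00)
print(f"estimated cost: ${session_cost:.2f}")
```

Because agents re-read project files and logs on each iteration, input tokens tend to dominate in long testing cycles, which is why monitoring matters for larger builds.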

Human oversight is essential

Even with advanced agents, developers must validate the output. This becomes especially important for applications involving security, privacy, or financial operations.

Feature expansion is ongoing

Google is expected to roll out updates as feedback comes in from the preview community.

How Users in the United States Can Get Started

Developers in the U.S. can immediately access the platform through the public preview. The steps to get started are straightforward:

- Install the IDE on a preferred operating system

- Log in through a Google account

- Connect to the appropriate AI model tier

- Open or import a project workspace

- Begin testing the agent workflows with natural-language instructions

- Evaluate artifacts and refine tasks as needed

As the platform evolves, users are encouraged to continue exploring its features and testing new workflows that may reduce friction in their projects.

Looking Ahead: What This Signals for the Future of Development

The introduction of this platform signals a significant shift in how software will be created in the coming years. Instead of focusing solely on manual typing or predictive suggestions, the future is heading toward intelligent automation integrated directly into the development environment.

Google’s tool demonstrates that AI can meaningfully support developers while still providing transparency, oversight, and accountability. The combination of agent-driven processing, artifact verification, and multi-environment integration represents what many consider the next major chapter in software engineering.

For U.S. developers, this is an opportunity to adopt emerging technology early, experiment with autonomous workflows, and prepare for an industry that increasingly blends human and AI collaboration.

Tell us your thoughts below: do agent-powered tools fit into your development workflow, or are you waiting to see where the technology goes next?